
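To compare a query document with gensim, the query is first converted to a bag of words with `doc2bow(query_doc)` and weighted with the `tf_idf` model to give `query_doc_tf_idf`. The similarity to each document is then computed and printed with `print('Comparing Result:', sims)`, and the similarities are summed for each query document.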

#PLAGIARISM CHECKER BETWEEN TWO DOCUMENTS INSTALL#

Another important benefit of gensim is that it lets you process large text files without loading the whole file into memory.

First, let's install nltk and gensim, for example with `pip install nltk gensim`. The second file is then read line by line and each line is tokenized into words (`for line in file2_docs:`).
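The memory benefit comes from streaming: gensim's dictionary and models accept any iterable of token lists, so a document can be read one line at a time. Below is a minimal sketch of such an iterable. The class name `LineCorpus` is made up for this example, and whitespace splitting stands in for a real tokenizer.

```python
import os
import tempfile


class LineCorpus:
    """Iterate over a text file, yielding one list of tokens per non-empty line."""

    def __init__(self, path):
        self.path = path

    def __iter__(self):
        with open(self.path, encoding="utf-8") as handle:
            for line in handle:  # only the current line is held in memory
                tokens = line.lower().split()  # stand-in for a real tokenizer
                if tokens:
                    yield tokens


# Usage: write a small temporary file and stream it back line by line.
with tempfile.NamedTemporaryFile(
    "w", suffix=".txt", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("First line of text\n\nSecond line here\n")

docs = list(LineCorpus(tmp.name))
os.remove(tmp.name)
print(docs)
```

Because `__iter__` reopens the file on every pass, the same object can be iterated more than once, which matters when one pass builds the dictionary and a second pass builds the vectors.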

#PLAGIARISM CHECKER BETWEEN TWO DOCUMENTS PLUS#

Topic models and word embeddings are available in other packages such as scikit-learn and R. But the breadth of gensim's facilities for building and evaluating topic models is unparalleled, and it offers many more conveniences for text processing.

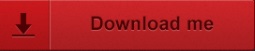

In this post we are going to build a web application that compares the similarity between two documents. We will learn the very basics of natural language processing (NLP), a branch of artificial intelligence that deals with the interaction between computers and humans using natural language.

This post was originally published in my lab, Reverse Python. Let's start with the base structure of the program; later we will add a graphical interface to make it much easier to use. Feel free to contribute to this project on my GitHub. YouTube channel with video tutorials: Reverse Python Youtube.

The Natural Language Toolkit (NLTK) is one of the most popular libraries for natural language processing. It is written in Python and has a big community behind it. NLTK is also very easy to learn; it is the easiest of the NLP libraries we are going to use. It contains text processing libraries for tokenization, parsing, classification, stemming, tagging and semantic reasoning.

Gensim is billed as a natural language processing package that does 'Topic Modeling for Humans', but it is practically much more than that. It is a leading, state-of-the-art package for processing texts and working with word vector models (such as Word2Vec and FastText).

Inside `check_plagiarism`, the entry `(student_a, text_vector_a)` is located with `index` and deleted from `new_vectors`. Then, for each remaining `student_b, text_vector_b` in `new_vectors`, the script computes `sim_score = similarity(text_vector_a, text_vector_b)`, sorts the two names into `student_pair`, and adds a `score` tuple of the two names and `sim_score` to `plagiarism_results`.

#PLAGIARISM CHECKER BETWEEN TWO DOCUMENTS ZIP#

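The project directory should contain the script and the students' `.txt` files.

The script imports `os`, `TfidfVectorizer` from `sklearn.feature_extraction.text`, and `cosine_similarity` from `sklearn.metrics.pairwise`. It collects the text files in `student_files` and their contents in `student_notes`. `vectorize` is a lambda that applies `TfidfVectorizer()` to the texts and converts the result with `toarray()`, and `similarity` is a lambda that calls `cosine_similarity` on two document vectors. The vectors are computed with `vectors = vectorize(student_notes)` and paired with their file names in `s_vectors = list(zip(student_files, vectors))`. `check_plagiarism()` starts with an empty set `plagiarism_results` and, for each `student_a, text_vector_a` in `s_vectors`, builds `new_vectors` from `s_vectors`.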
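Put together, the script might look roughly like the sketch below. It is a reconstruction under assumptions, not the original listing: the helper `load_notes` is a made-up name, the files are assumed to be UTF-8, and `itertools.combinations` replaces the copy-and-delete loop so that each pair of students is compared once.

```python
import os
from itertools import combinations

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity


def load_notes(directory="."):
    """Read every .txt file in `directory`; return (file names, file contents)."""
    files = sorted(f for f in os.listdir(directory) if f.endswith(".txt"))
    notes = []
    for name in files:
        # Assumption: the assignments are UTF-8 encoded text.
        with open(os.path.join(directory, name), encoding="utf-8") as handle:
            notes.append(handle.read())
    return files, notes


def check_plagiarism(files, notes):
    """Return a set of (file_a, file_b, similarity) tuples, one per pair of files."""
    # One TF-IDF vector per document, as a dense array.
    vectors = TfidfVectorizer().fit_transform(notes).toarray()
    results = set()
    for (file_a, vec_a), (file_b, vec_b) in combinations(zip(files, vectors), 2):
        # cosine_similarity returns a 2x2 matrix; [0][1] is the cross term.
        score = cosine_similarity([vec_a, vec_b])[0][1]
        first, second = sorted((file_a, file_b))
        results.add((first, second, float(score)))
    return results
```

Calling `check_plagiarism(*load_notes())` from the directory that holds the `.txt` files returns one tuple per pair of students; a score close to 1 means the two texts use nearly the same words with similar weights.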

#PLAGIARISM CHECKER BETWEEN TWO DOCUMENTS DOWNLOAD#

We all know that computers can only understand 0s and 1s, so to perform any computation on textual data we need a way to convert the text into numbers. The process of converting textual data into an array of numbers is generally known as word embedding. This vectorization is not a random process; it follows specific algorithms that result in words being represented as positions in space.

How do we detect similarity in documents? We are going to use scikit-learn's built-in features to do this. Here we use the basic concepts of vectors and the dot product to determine how similar two texts are, by computing the cosine similarity between the vector representations of the students' text assignments.

You also need sample text documents of the students' assignments, which we will use to test the model. The text files need to be in the same directory as your script and have a `.txt` extension. If you want to use the sample text files I used for this tutorial, download here.
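To make the dot-product idea concrete, here is a small sketch of cosine similarity in plain Python. It uses raw word counts as the vectors rather than the TF-IDF weights scikit-learn would produce, and the function name `cosine_similarity_counts` is made up for this example.

```python
import math
from collections import Counter


def cosine_similarity_counts(text_a, text_b):
    """Cosine similarity between two texts, using raw word counts as vectors."""
    vec_a = Counter(text_a.lower().split())
    vec_b = Counter(text_b.lower().split())
    # Dot product: only words present in both texts contribute.
    dot = sum(vec_a[word] * vec_b[word] for word in vec_a.keys() & vec_b.keys())
    # Euclidean length of each count vector.
    norm_a = math.sqrt(sum(count * count for count in vec_a.values()))
    norm_b = math.sqrt(sum(count * count for count in vec_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty text has no direction to compare
    return dot / (norm_a * norm_b)


print(cosine_similarity_counts("a b", "a c"))
```

With non-negative counts the score falls between 0 (no words in common) and 1 (the same words in the same proportions), regardless of how long either text is.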
